TL;DR. Not far!

This post started as an answer I wrote on Quora, which I decided to improve and expand with some personal thoughts.

To support the above claim, let us consider, for instance, a field where mathematics has proven immensely successful:

Physics!

This is why mathematics came to be dubbed the language of nature, and it is what convinced Eugene Wigner to write his famous 1960 paper “The Unreasonable Effectiveness of Mathematics in the Natural Sciences”. It is important to stress here that by “Natural Sciences”, Wigner meant physics and not the biological sciences.

Not only were the derived mathematical models able to accurately reproduce experimental results, they also made predictions that could be tested and verified experimentally (see Newton’s Flaming Sword).

Let us take the example of Newton’s mechanics, which was able to accurately describe the trajectories of planets and other celestial objects. However, its predictions are sometimes wrong. If the experimental measurements are correct, this indicates that the theory is not fully correct, and physicists get excited: they constantly try to disprove existing theories in order to discover new physics. For instance, Newtonian physics could not fully account for the precession of Mercury’s perihelion (here, precession refers to the slow rotation of the orbit itself, so that the point of closest approach to the Sun shifts a little at each revolution). Einstein’s general relativity predicted that precession correctly. The photoelectric effect and the other quantum effects that classical theories such as electromagnetism failed to predict are similar examples.

Modeling is not restricted to science, where the goal is to provide a good model of reality that can also lead to accurate predictions. It also has direct implications in engineering, as predictive models can be exploited to build accurate simulators that help engineers design complex multiphysics systems. Engineers can first build a model of their system and validate it through simulations before building the system itself and performing experimental tests. Just imagine having to build new planes, spacecraft, industrial machines, etc. without such tools nowadays. Numerical simulation and validation have become an integral part of the modern design process.
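To make the Mercury example a bit more concrete, the sketch below evaluates the standard general-relativistic formula for the perihelion advance per orbit, Δφ = 6πGM/(c²a(1−e²)), and converts it to arcseconds per century. The orbital constants are rounded textbook values that I am assuming here; they are not taken from this post.

```python
import math

# Rounded physical and orbital constants (assumed textbook values)
GM_SUN = 1.32712440018e20   # gravitational parameter of the Sun, m^3/s^2
C = 299_792_458.0           # speed of light, m/s
A_MERCURY = 5.7909e10       # semi-major axis of Mercury's orbit, m
E_MERCURY = 0.2056          # eccentricity of Mercury's orbit
PERIOD_DAYS = 87.969        # orbital period of Mercury, days


def gr_perihelion_shift_per_orbit(gm, a, e, c=C):
    """General-relativistic perihelion advance per orbit, in radians."""
    return 6.0 * math.pi * gm / (c**2 * a * (1.0 - e**2))


def arcsec_per_century(shift_per_orbit_rad, period_days):
    """Accumulate the per-orbit shift over 100 Julian years, in arcseconds."""
    orbits_per_century = 100.0 * 365.25 / period_days
    return math.degrees(shift_per_orbit_rad * orbits_per_century) * 3600.0


shift = gr_perihelion_shift_per_orbit(GM_SUN, A_MERCURY, E_MERCURY)
print(f"{arcsec_per_century(shift, PERIOD_DAYS):.1f} arcsec per century")
```

The result is about 43 arcseconds per century, the small residual that Newtonian mechanics, even after accounting for the pull of the other planets, left unexplained.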

Models that fail to make correct predictions may also hint that the assumptions are incorrect. In the 19th century, Uranus’ orbit could not be accurately explained by Newtonian mechanics and Kepler’s laws. This is why Urbain Le Verrier postulated the existence of another celestial body which, when included in the model, would lead to correct predictions of Uranus’ trajectory. This led to the discovery of Neptune solely based on a mathematical model! This was not sheer luck: it was possible because Newtonian mechanics was, and still is, an accurate model in the first place.

Devising predictive models in physics was possible because our universe seems to obey strict laws, which we have been trying to (re)discover. “The Book of Nature is written in the language of mathematics”, usually attributed to Galileo Galilei although it only paraphrases a longer passage from his The Assayer, summarizes this pretty well. More recent examples of such predictive theories are General Relativity and Quantum Mechanics.

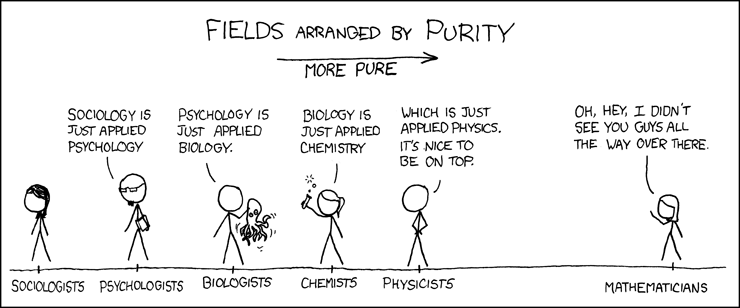

Biological systems vastly differ from those studied by physicists. Of course, as XKCD (always) rightly pointed out, biology is applied chemistry, which is itself applied physics. But all jokes aside, biology is an extremely complex field: living systems were shaped by an evolutionary process that is still quite poorly understood, and a lot of effort is still needed to fully understand it.

Quite recently, researchers have been trying to combine biology and mathematics in an attempt to gain a better understanding of what is actually going on, and to provide new insights into already known problems. This field, Systems Biology, aims to achieve the same feat as in physics: putting biology on solid mathematical ground from which we can make predictions about experimental outcomes. But there is a catch! In fact, many catches!

Many modeling techniques can be applied to represent a biological system, depending on the desired degree of accuracy and the underlying assumptions, and going into any great detail is beyond the scope of this post. However, it is very important to mention the following caveats, which are common to pretty much all modeling approaches:

- Firstly, we do not know the biology perfectly. So, in the end, we model something that we do not completely comprehend. That’s already a big problem. This poor knowledge has a dramatic impact in fields such as Synthetic Biology, where one wants to implement synthetic circuits inside cells to execute some desired functions. While Newton certainly did not know what gravity really was (and we still do not really know), he was still able to come up with simple formulas that could describe trajectories on a macroscopic scale without knowing what was going on at a microscopic level. This feat seems difficult to achieve in biology, except, maybe, in certain specific cases.

- Living organisms are an emergent property of chemistry and physics, and they are extremely complex as they involve a huge number of molecules, structures and functions. As a result, mechanistic models can get very complex very quickly.

- In order to reduce complexity, we make strong approximations. For instance, when we model a simple biological network, we implicitly isolate or decouple it from the cellular environment, that is, all its possible interactions with the rest of the cell are often neglected. The problem is that this isolation is not a valid working hypothesis: some cellular resources, such as amino acids or ribosomes, are shared between circuits, certain proteins may have multiple roles in the cell, the environment is constantly changing, etc.

- Even assuming that the network is sufficiently decoupled from the cell (something we call orthogonality), there will still be an infinite number of possible model structures that could be used. Of course, some of them are more plausible than others, but there is, in general, no rigorous way to pick one over the others. One reason is that, unlike in physics, we do not have any laws or fundamental principles we can rely on.

- Once we have a model, we face the problem of finding the right parameter values. This can be done with parameter inference algorithms, such as Bayesian estimation, based on measured data. The problem is twofold here. Firstly, data are scarce, expensive and time-consuming to obtain, and not necessarily of very good quality (very noisy, lots of artefacts, etc.). We are also very limited in terms of what we can simultaneously measure inside a cell in a non-invasive and non-destructive way. Secondly, inference algorithms are numerically demanding and do not scale well with the size of the system. This is a huge challenge, since most algorithms suffer from the curse of dimensionality while biological networks are high-dimensional by nature. Good luck!
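The shared-resource problem can be illustrated with a deliberately minimal sketch. It assumes a hypothetical model in which two genes, A and B, have no regulatory interaction whatsoever but compete for a single pool of free ribosomes at quasi-steady state. The function name, parameters and values are illustrative assumptions, not a calibrated model.

```python
# Hypothetical, simplified resource-sharing model (illustrative parameters only).
def steady_state_proteins(mrna_levels, dissociation_constants,
                          r_total=1.0, k_tl=10.0, delta=1.0):
    # Ribosome "load" imposed by each mRNA species: m_i / K_i
    loads = [m / K for m, K in zip(mrna_levels, dissociation_constants)]
    # Free ribosomes left after binding to all mRNAs (conservation of ribosomes)
    r_free = r_total / (1.0 + sum(loads))
    # Protein i is produced at rate k_tl * load_i * r_free and degraded at rate delta
    return [k_tl * load * r_free / delta for load in loads]


# Gene A is held fixed; only the expression of the unrelated gene B is increased.
for m_b in [0.0, 1.0, 5.0, 20.0]:
    p_a, p_b = steady_state_proteins([1.0, m_b], [1.0, 1.0])
    print(f"mRNA_B = {m_b:5.1f} -> protein A = {p_a:.2f}, protein B = {p_b:.2f}")
```

Protein A drops from 5.00 to about 0.45 although nothing in its own "circuit" has changed: the coupling comes entirely from the shared pool, which is exactly what an isolated model of gene A would miss.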
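To give a flavor of what Bayesian parameter inference involves, here is a minimal random-walk Metropolis-Hastings sketch that infers a single degradation rate from noisy synthetic measurements of an exponential decay. The one-parameter model, the flat prior on positive rates, the Gaussian noise level and the step size are all assumptions made for illustration.

```python
import math
import random


def decay_model(gamma, t):
    # Hypothetical one-parameter model: x(t) = exp(-gamma * t), with x(0) = 1
    return math.exp(-gamma * t)


def log_posterior(gamma, times, data, noise_sd):
    # Flat prior on gamma > 0 and Gaussian measurement noise (both assumptions)
    if gamma <= 0.0:
        return -math.inf
    sse = sum((y - decay_model(gamma, t)) ** 2 for t, y in zip(times, data))
    return -sse / (2.0 * noise_sd ** 2)


def metropolis_hastings(times, data, noise_sd, n_samples, step, rng, gamma0=1.0):
    gamma = gamma0
    log_p = log_posterior(gamma, times, data, noise_sd)
    samples = []
    for _ in range(n_samples):
        proposal = gamma + rng.gauss(0.0, step)  # symmetric random-walk proposal
        log_p_new = log_posterior(proposal, times, data, noise_sd)
        # Accept with probability min(1, p_new / p_old)
        if rng.random() < math.exp(min(0.0, log_p_new - log_p)):
            gamma, log_p = proposal, log_p_new
        samples.append(gamma)
    return samples


rng = random.Random(0)
times = [0.5 * i for i in range(20)]
gamma_true, noise_sd = 0.8, 0.02
data = [decay_model(gamma_true, t) + rng.gauss(0.0, noise_sd) for t in times]

samples = metropolis_hastings(times, data, noise_sd, n_samples=20_000, step=0.02, rng=rng)
posterior = samples[2_000:]  # discard burn-in
print(f"posterior mean of gamma: {sum(posterior) / len(posterior):.3f}")
```

With a single parameter this is cheap. A naive grid with 10 values per parameter, however, already needs 10^d model evaluations for d parameters, and samplers such as this one also tend to mix more slowly as d grows, which is one face of the curse of dimensionality.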

Having described the process of obtaining a model for a given biological network, we can see that there are problems at multiple levels:

- Framework level. There does not seem to exist a framework that emerged from biology in the same way that differential calculus emerged from the need to describe gravitation in Newtonian mechanics. So far, people are just applying what they know from physics, mathematics, computer science, etc. onto biology (a top-down approach), while there is a need for a genuinely bottom-up approach that may lead to, or require, new mathematics. The word “onto” is especially meaningful here: many studies tend to force those approaches onto biological problems/systems, resulting in an overall unnatural approach.

- Modeling level. Even if we stick to a modeling paradigm, we will face issues in terms of model selection.

- Inference level. Many models cannot be fully identified, either because they are structurally non-identifiable or because there is not enough data. Moreover, the indirect nature of biological measurements means that what we measure is compatible with many different underlying models, so a broad array of hidden assumptions about the data gets swept under the rug.

- Predictability level. Models obtained this way are unlikely to be predictive.
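Structural non-identifiability already shows up in an almost trivial example. The sketch below assumes a hypothetical reporter whose concentration x is produced at rate k_prod, degraded at rate k_deg, and observed only up to an unknown scaling factor, as is common with fluorescence measurements in arbitrary units. All names and values are illustrative.

```python
import math


def measured_output(t, k_prod, k_deg, scale):
    """Hypothetical reporter model: dx/dt = k_prod - k_deg * x with x(0) = 0,
    observed only through y = scale * x."""
    x = (k_prod / k_deg) * (1.0 - math.exp(-k_deg * t))
    return scale * x


times = [0.5 * i for i in range(21)]
y1 = [measured_output(t, k_prod=2.0, k_deg=0.5, scale=1.0) for t in times]
y2 = [measured_output(t, k_prod=1.0, k_deg=0.5, scale=2.0) for t in times]

# Two different parameter sets give indistinguishable outputs: from y(t) alone,
# only k_deg and the product scale * k_prod can be inferred.
print(max(abs(a - b) for a, b in zip(y1, y2)))
```

No amount of extra data of this kind separates k_prod from scale; only an additional, different type of measurement (for example an absolute calibration of the reporter) could.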

What we get as a result is, sadly, a mess: a field with no identity, as there is no real common ground between researchers, no common terminology and no clear definitions. However, putting that aside, since the field is young and we are probably still waiting for our Maxwell or Gibbs, we should be able to do something about the aforementioned issues so that, at some point, a genuine Systems Biology field with its own concepts and principles will emerge. In the meantime, our goal should be to build models that are capable of making accurate quantitative predictions. Unfortunately, this is currently out of reach, as it would require a model that is structurally correct and has the correct parameter values.

Taking a step back, we can start by simply asking our models to make qualitative predictions, which simplifies the problem a little. By doing so, we no longer care about the exact or even approximate values of the states of the system; instead, we would like to predict a certain behavior such as oscillations, bistability, etc. Unfortunately, very few theory-driven results have been experimentally validated, at least far fewer than in physics. Here are a few examples where theory led to experimental testing and validation (the list is non-exhaustive; feel free to leave additional suggestions in the comments or by email):

- The paper “Modeling the chemotactic response of Escherichia coli to time-varying stimuli” by Tu, Shimizu, and Berg proposed a model for bacterial chemotaxis. This was later followed by the paper “Fold-change detection and scalar symmetry of sensory input fields” by Shoval, Goentoro, Hart, Mayo, Sontag, and Alon which predicted that this model had the Fold Change Detection property. This was experimentally shown, for instance, in “Response rescaling in bacterial chemotaxis” by Lazova, Ahmed, Bellomo, Stocker, and Shimizu.

- The paper “Antithetic Integral Feedback Ensures Robust Perfect Adaptation in Noisy Biomolecular Networks” by Briat, Gupta and Khammash proposed a genetic regulatory motif for perfect adaptation in cellular organisms. This was experimentally tested and validated in “A universal biomolecular integral feedback controller for robust perfect adaptation” by Aoki, Lillacci, Gupta, Baumschlager, Schweingruber, and Khammash.
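Fold-change detection can be illustrated with a toy model. The sketch below assumes a generic incoherent feedforward loop in which the input u drives both an intermediate x and an output y, while x represses y; it is a standard textbook-style FCD example, not the chemotaxis model of Tu, Shimizu and Berg. Because scaling u by a constant scales x by the same constant, the ratio u/x, and hence y, is unchanged.

```python
def simulate_iffl(u_before, u_after, t_step=5.0, t_end=20.0, dt=1e-3):
    """Euler simulation of a toy incoherent feedforward loop:
        dx/dt = u - x
        dy/dt = u / x - y
    started at the steady state for u_before, with a step to u_after at t_step."""
    x, y = u_before, 1.0  # steady state for a constant input u_before
    ys = []
    for i in range(int(round(t_end / dt))):
        u = u_before if i * dt < t_step else u_after
        x, y = x + dt * (u - x), y + dt * (u / x - y)
        ys.append(y)
    return ys


# The same 2-fold step applied at two different background levels
response_low = simulate_iffl(1.0, 2.0)
response_high = simulate_iffl(10.0, 20.0)
print(max(abs(a - b) for a, b in zip(response_low, response_high)))  # rounding-level
print(max(response_low))  # transient peak above the adapted level y = 1
```

Both steps produce the same transient pulse before y adapts back to 1: the output reports the fold change of the input, not its absolute level.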
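The key property of the antithetic motif, robust perfect adaptation, can already be seen in a deterministic caricature. The sketch below assumes a single-species plant and arbitrary illustrative parameter values; it is a simplified ODE version, not the stochastic setting analyzed by Briat, Gupta and Khammash. At any steady state, subtracting the first two equations gives theta * x = mu, so x settles at mu / theta whatever the plant’s degradation rate gamma, provided the closed loop is stable (which is the case for these values).

```python
def simulate_antithetic(gamma, mu=1.0, theta=1.0, eta=10.0, k=1.0,
                        t_end=100.0, dt=1e-3):
    """Euler simulation of a deterministic antithetic integral controller
    acting on a one-species plant:
        dz1/dt = mu - eta * z1 * z2          (reference species)
        dz2/dt = theta * x - eta * z1 * z2   (sensing species)
        dx/dt  = k * z1 - gamma * x          (controlled output)
    Returns the final value of x."""
    z1 = z2 = x = 0.0
    for _ in range(int(round(t_end / dt))):
        annihilation = eta * z1 * z2  # z1 and z2 annihilate each other
        z1, z2, x = (z1 + dt * (mu - annihilation),
                     z2 + dt * (theta * x - annihilation),
                     x + dt * (k * z1 - gamma * x))
    return x


# The set-point mu / theta = 1 is reached regardless of the plant parameter gamma
for gamma in (1.0, 2.0, 5.0):
    print(f"gamma = {gamma}: final x = {simulate_antithetic(gamma):.4f}")
```

Changing gamma changes the transient but not the final value of x, which is what makes such a motif attractive as a design principle.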

The predictions were essentially qualitative, but this is the best we can do in biology at the moment. Compared to physics, we are still in antiquity. Why? Because those predictions have been made on very simple biological systems, while a simple bacterium like E. coli has 4,401 genes encoding 116 RNAs and 4,616 proteins, which together implement all of its signaling, stress responses, regulation mechanisms, and more.

The main difficulty is that biological systems are complex systems, which are far from being completely understood. The complexity of a cell like E. coli is immense, and having a model that can accurately capture its entire behavior in all possible environmental scenarios is out of reach. Unfortunately for us, living organisms have developed through an evolutionary process in which everything is connected to everything, and it is difficult to identify subsystems that can be analyzed separately and independently from the others. In other words, the reductionist approach does not seem to apply to biological systems. One therefore needs a holistic approach, but this leads to insanely complicated problems that are out of reach both mathematically and computationally.

I will end this post with a quote from Israel Gelfand:

“Eugene Wigner wrote a famous essay on the unreasonable effectiveness of mathematics in natural sciences. He meant physics, of course. There is only one thing which is more unreasonable than the unreasonable effectiveness of mathematics in physics, and this is the unreasonable ineffectiveness of mathematics in biology.”

Even though this was said a while ago, I could not agree more. There is still a long way to go before mathematics is ready to deal with realistic biological systems.

As an epilogue, we may ask why we include models in biology papers at all. Why keep them if they are unable to make reliable predictions about a behavior that was not previously observed in experimental data, or even to predict the presence of an unknown, missing model component that could better explain the data?

I think the reason is not scientific; it is due to some sort of social conditioning.

Social conditioning is the sociological process of training individuals in a society to respond in a manner generally approved by the society in general and peer groups within society.

To analyze this, let us take an analogy: ties, or fashion! I am not sure where ties come from, and there is probably a cultural reason for them, but let’s ignore this and assume that one day a random guy decides to go to an admittedly fancy party wearing a tie, something no one had ever done before. The bouncer looks at him, shrugs and lets him in. Inside, some guests appreciate this novelty, others don’t, and the remaining ones do not really mind. But it certainly attracts attention and curiosity.

At the next few parties, some other people decide to wear a tie, a different one, of course. Even bouncers start wearing them. Eventually, ties become a norm: everyone implicitly expects you to wear one when coming to a party, and no one will let you in without one. But no one really knows why. This is what we call “social conditioning”, and this is the reason why reviewers will keep asking for models in experimental papers, which is why authors will keep putting models in their papers.

As Noah Olsman rightly pointed out to me, the issue is not only that people somewhat compulsively include models, but also that they are not asked to really prove that their models are accurate.

It is important for the future of Systems Biology and Synthetic Biology to refocus both on the design of reliable models with predictive power and on circuits that behave in a predictable way. How can we devise such models for systems that are intrinsically ill-behaved or fragile (i.e. non-robust)? In this regard, we should not be allowed to just publish data from a single circuit architecture with a single set of parameters in synthetic biology. If you cannot show that your circuit behaves in a predictable way as you tune parameter values or vary the order and orientation of the underlying genes, then who knows if the results are at all generalizable! (Thanks again, Noah.)
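The kind of check suggested above, whether a qualitative behavior survives as parameters are tuned, can be sketched on a toy circuit. The example below assumes a symmetric two-repressor toggle switch (in the style of Gardner, Cantor and Collins) with dimensionless, hypothetical parameters, and sweeps the promoter strength alpha to test for bistability. For this symmetric model with a Hill coefficient of 2, switching only appears when alpha exceeds 2, so a design tuned close to that boundary would be fragile.

```python
def toggle_final_state(alpha, u0, v0, n=2.0, t_end=100.0, dt=0.01):
    """Euler simulation of a symmetric toggle switch:
        du/dt = alpha / (1 + v**n) - u
        dv/dt = alpha / (1 + u**n) - v
    Returns the final (u, v)."""
    u, v = u0, v0
    for _ in range(int(round(t_end / dt))):
        u, v = (u + dt * (alpha / (1.0 + v ** n) - u),
                v + dt * (alpha / (1.0 + u ** n) - v))
    return u, v


def is_bistable(alpha, threshold=0.5):
    # Start from two mirror-image initial conditions; if they settle in clearly
    # different states, the circuit behaves as a switch for this alpha.
    u_a, _ = toggle_final_state(alpha, 2.0, 0.0)
    u_b, _ = toggle_final_state(alpha, 0.0, 2.0)
    return abs(u_a - u_b) > threshold


for alpha in (1.0, 1.5, 3.0, 5.0, 10.0):
    print(f"alpha = {alpha:4.1f}: bistable = {is_bistable(alpha)}")
```

A real study would of course sweep several parameters at once and compare against measurements, but even this toy sweep shows that "the circuit is a switch" is a statement about a region of parameter space, not about a single parameter set.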

Thanks for reading!

Feel free to post comments and share. For any input, please contact me at blog@briat.info.

Acknowledgments

Many thanks to (not in any specific order) Noah Olsman, Timothy Frei, Yili Qian, Maurice Filo, Ankit Gupta, Christian Cuba Samaniego for the comments that led to the current version of this post.

Very thought-provoking and informative article!

On the role of mathematical modeling in biology, I highly recommend this article by Jeremy Gunawardena: Models in biology: ‘accurate descriptions of our pathetic thinking’ https://bmcbiol.biomedcentral.com/articles/10.1186/1741-7007-12-29

Very interesting overview of key aspects and crucial questions for predictive modelling in biology. There is so much to do to demystify biology and make it a quantitative engineering field.

Thanks for quoting Israel Gel’fand. He had the next-door office to mine for about 20 years at Rutgers. The interaction that I remember the most is when I told him that I was becoming interested in mathematical biology. He replied that there is good mathematics, and there is good biology, but no such thing as “mathematical biology”.